Introduction

The automotive industry is in the midst of a major transformation, driven by the pursuit of safer, smarter, and more autonomous mobility. At the heart of this evolution lies sensing technology, which serves as the digital nervous system of modern vehicles. Among the sensors that enable advanced driver assistance systems (ADAS) and autonomous driving, automotive millimeter-wave radar has become a cornerstone technology, increasingly favored by Original Equipment Manufacturers (OEMs) worldwide.

The preference for millimeter-wave radar stems from its reliability, precision, and robust performance across diverse environmental conditions. Unlike optical sensors, which struggle in darkness, glare, fog, or heavy precipitation, radar works independently of ambient lighting and is far less affected by precipitation or poor visibility. This robustness makes it indispensable for safety-critical applications, where consistent performance can mean the difference between avoiding an accident and a catastrophic failure.

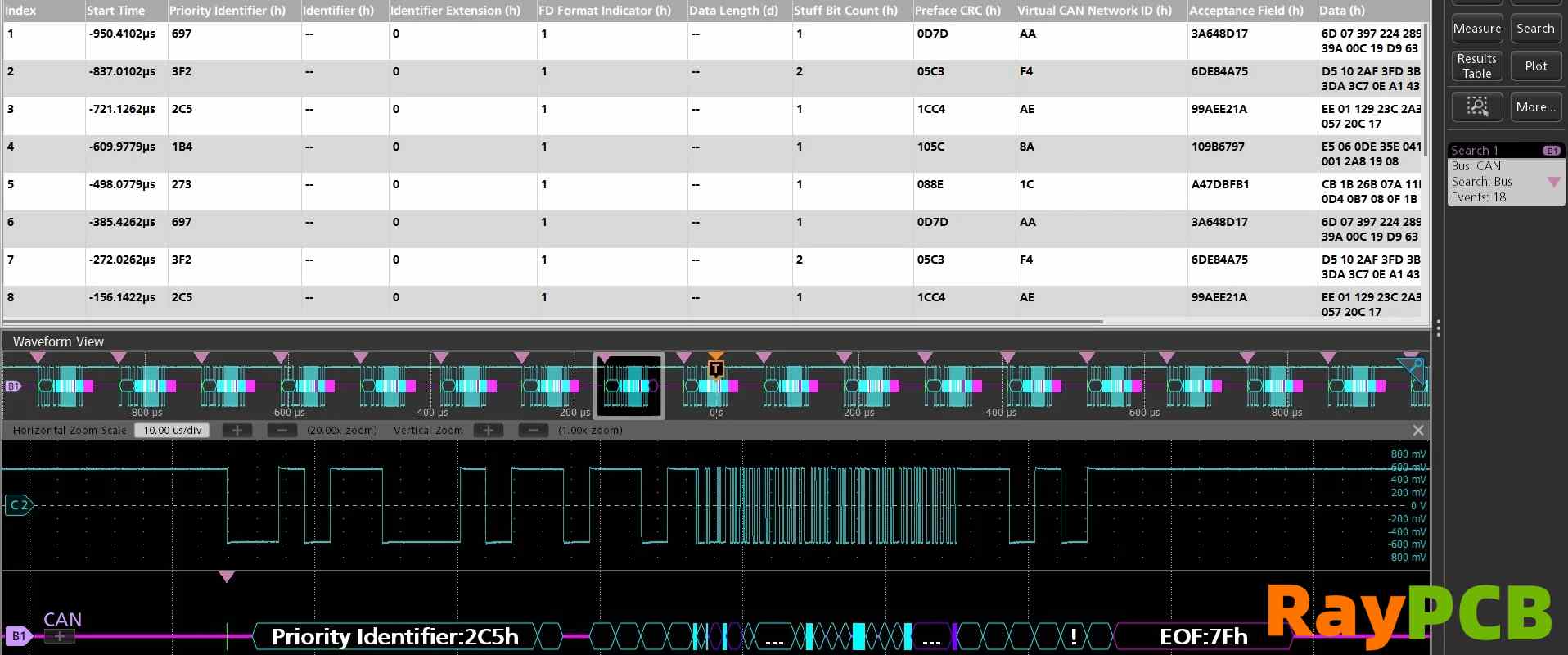

However, as ADAS functions grow more sophisticated and autonomous driving capabilities advance, the data throughput required from radar sensors has grown sharply. Traditional communication protocols, while adequate for earlier generations of automotive electronics, are increasingly strained by the bandwidth demands of modern radar systems. This challenge has driven the development and adoption of CAN XL (Controller Area Network eXtended Length), a next-generation protocol intended to bridge the gap between current capabilities and future requirements.

This comprehensive analysis explores the technical advantages of CAN XL over traditional CAN FD (CAN with Flexible Data-Rate) communication technology specifically in millimeter-wave radar applications, examining not only the immediate benefits but also the long-term implications for automotive system architecture and performance optimization.

1. Technical Advantages and Evolution of Millimeter-Wave Radar

The Multi-Sensor Ecosystem

Contemporary ADAS implementations represent sophisticated multi-sensor ecosystems, integrating cameras for visual perception, LiDAR for high-resolution 3D mapping, ultrasonic sensors for close-proximity detection, and millimeter-wave radar for robust all-weather sensing. Each sensor type contributes unique capabilities to the overall perception system, but millimeter-wave radar occupies a particularly crucial niche due to its distinctive operational characteristics.

Unparalleled All-Weather Reliability

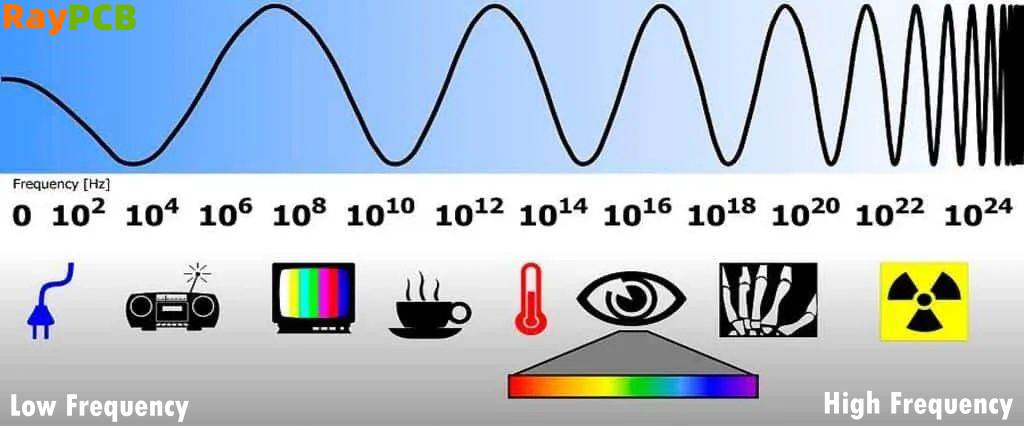

The physics of millimeter-wave radar gives it inherent advantages in challenging environmental conditions. Operating in the 76 to 81 GHz band (commonly called the 77 GHz band), these systems transmit electromagnetic waves that are attenuated far less by atmospheric moisture, dust particles, and similar obstacles than the light used by optical sensors. Cameras can become nearly unusable in dense fog or heavy precipitation, and LiDAR range shrinks significantly in adverse weather, whereas millimeter-wave radar retains most of its detection capability across the weather conditions encountered in real-world driving, although very heavy rain still causes some attenuation.

This robustness also shows in how performance degrades. Camera-based systems degrade to varying degrees depending on the severity of the weather, whereas radar detection range, resolution, and accuracy change far less and more predictably. Such predictable behavior is crucial for safety-critical applications, where system behavior must be deterministic and reliable.

Enhanced Detection Capabilities and Resolution

The move to 77 GHz millimeter-wave radar is a significant advance over earlier 24 GHz systems. The shorter wavelength allows more antenna elements to fit into the same aperture, which improves angular resolution, and the wider bandwidth available in the 76 to 81 GHz band (up to 4 GHz, versus 250 MHz in the 24 GHz ISM band) improves range resolution. Together these allow more precise object localization and a better ability to separate closely spaced targets, which in turn improves object classification: systems can distinguish pedestrians, cyclists, vehicles, and stationary objects with greater accuracy.
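To make the wavelength argument concrete, the sketch below compares 24 GHz and 77 GHz using the diffraction rule of thumb that beamwidth is roughly wavelength divided by antenna aperture. The 5 cm aperture and the function names are hypothetical illustration values, and real radar angular resolution also depends on array design and signal processing; the point is only the scaling.

```python
# Sketch: wavelength and approximate beamwidth at 24 GHz vs 77 GHz.
# Assumption: beamwidth ~ wavelength / aperture (diffraction rule of thumb).
# The 5 cm aperture is a hypothetical example value.
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength for a carrier frequency."""
    return C / freq_hz


def approx_beamwidth_deg(freq_hz: float, aperture_m: float) -> float:
    """Rough angular resolution for a given aperture, converted to degrees."""
    return math.degrees(wavelength_m(freq_hz) / aperture_m)


if __name__ == "__main__":
    aperture = 0.05  # hypothetical 5 cm antenna aperture
    for f in (24e9, 77e9):
        print(f"{f / 1e9:.0f} GHz: wavelength {wavelength_m(f) * 1e3:.2f} mm, "
              f"beamwidth ~ {approx_beamwidth_deg(f, aperture):.1f} deg")
```

For a fixed aperture, the beamwidth shrinks by the frequency ratio 77/24, roughly a factor of 3.2.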

The extended detection range capabilities of modern 77 GHz systems enable earlier threat detection and longer decision-making windows for autonomous systems. Long-range detection is particularly crucial for highway applications, where high-speed scenarios require maximum advance warning to execute safe maneuvers. Current generation systems can reliably detect and track objects at distances exceeding 200 meters, providing sufficient time for complex decision-making processes in high-speed scenarios.
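The value of a 200-meter detection range can be expressed as a time budget. The sketch below assumes a constant closing speed and ignores sensor, processing, and actuation latency, so it is an upper bound on the time available to react. The speeds are illustrative examples.

```python
# Sketch: time between first detection and reaching the object.
# Assumptions: constant closing speed; no sensor or actuation latency modeled.

def time_to_reach_s(detection_range_m: float, closing_speed_kmh: float) -> float:
    """Seconds until the gap closes at a constant closing speed."""
    closing_speed_ms = closing_speed_kmh / 3.6  # km/h -> m/s
    return detection_range_m / closing_speed_ms


if __name__ == "__main__":
    for speed in (80, 130, 180):  # example closing speeds in km/h
        print(f"{speed} km/h: {time_to_reach_s(200, speed):.1f} s from 200 m")
```

At a 180 km/h closing speed, a 200 m detection leaves about 4 seconds, which must cover perception, decision-making, and the maneuver itself.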

Superior Penetration and Environmental Adaptability

Beyond weather robustness, millimeter-wave radar can penetrate many media that block optical sensing. It can detect objects through fog, dust, and smoke, and through certain non-metallic materials such as plastics, which gives it unique advantages in complex driving environments. For instance, radar can detect vehicles obscured by dust clouds on unpaved roads, and may pick up obstacles behind light vegetation that would completely block optical sensors.

This penetration capability also enables through-bumper mounting, where radar sensors are hidden behind plastic body panels. Because the fascia and its paint still attenuate and reflect part of the signal, the panel must be designed to be radar-compatible (for example, by avoiding metallic paint in front of the sensor) to keep performance degradation small. This integration flexibility allows automotive designers to maintain aesthetic integrity while providing comprehensive sensor coverage.

Economic and Practical Considerations

From a practical deployment perspective, millimeter-wave radar offers compelling economic advantages over alternative sensing technologies. While LiDAR systems currently command premium prices that largely limit them to luxury vehicles, millimeter-wave radar strikes a favorable balance between cost and performance that makes it viable for mass-market vehicles. Radar manufacturing processes have matured significantly, enabling economies of scale that further improve cost-effectiveness.

Additionally, the robust nature of radar sensors reduces maintenance requirements and extends operational lifespans compared to more delicate optical systems. This reliability translates into lower total cost of ownership and improved customer satisfaction through reduced service interventions.

2. The Data Revolution: Understanding Radar Output Growth

Data Generation and Structure

Modern millimeter-wave radar systems generate sophisticated real-time data streams that provide comprehensive environmental perception capabilities. These systems typically output data in two primary formats: point clouds that represent raw detection data, and object lists that contain processed information about tracked targets. Each format serves specific purposes within the broader ADAS architecture and places distinct demands on communication infrastructure.

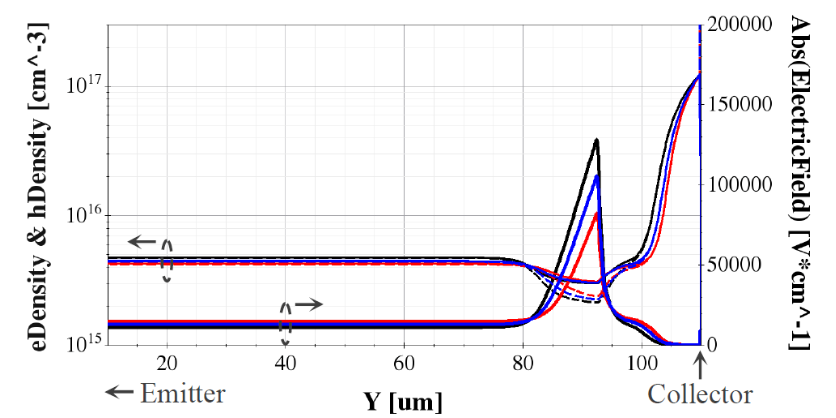

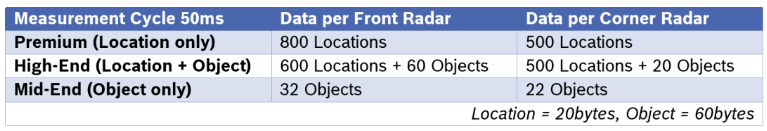

Point cloud data is the fundamental output of radar signal processing: individual detection points with associated metadata such as range, relative velocity, angle of arrival, and signal strength. A single radar sensor can generate hundreds to thousands of these detection points per measurement cycle, with a typical cycle time of 50 milliseconds (an update rate of 20 Hz).
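A rough payload data rate follows directly from points per cycle, bytes per point, and cycle time. The sketch below assumes a hypothetical packing of four little-endian 16-bit fields per detection (range, radial velocity, azimuth, signal strength), 1000 detections per cycle, and the 50 ms cycle time; real sensors use their own formats, and bus protocol overhead is not included.

```python
# Sketch: payload data rate of a radar point cloud.
# Assumptions (hypothetical, not from any specific sensor): each detection is
# packed as four little-endian int16 fields, 1000 detections per cycle,
# 50 ms cycle time. Bus protocol overhead is excluded.
import struct

DETECTION_FORMAT = "<hhhh"  # range, radial velocity, azimuth, signal strength
DETECTION_BYTES = struct.calcsize(DETECTION_FORMAT)  # 8 bytes


def point_cloud_bitrate(points_per_cycle: int, cycle_s: float,
                        bytes_per_point: int = DETECTION_BYTES) -> float:
    """Payload bits per second for one sensor's point cloud."""
    return points_per_cycle * bytes_per_point * 8 / cycle_s


if __name__ == "__main__":
    one_point = struct.pack(DETECTION_FORMAT, 4520, -133, 212, 57)  # scaled integer values
    print(len(one_point), "bytes per detection")
    print(f"{point_cloud_bitrate(1000, 0.050) / 1e6:.2f} Mbit/s payload")
```

Under these assumptions a single sensor produces about 1.28 Mbit/s of raw payload before any framing overhead, and the figure scales linearly with both point count and bytes per point.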

Object list data represents a higher level of processing, where individual detection points are clustered, tracked, and classified into discrete objects. Each object entry contains comprehensive information including position coordinates, velocity vectors, acceleration estimates, object dimensions, classification confidence levels, and unique tracking identifiers that enable consistent object following across multiple measurement cycles.
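An object-list entry can be modeled as a fixed-size record carrying the fields listed above. The layout below (field types, ordering, and the `RadarObject` name) is a hypothetical illustration, not a standardized radar interface; it simply shows how a per-object size, and hence a per-cycle size, falls out of the field choice.

```python
# Sketch: one possible fixed-size layout for an object-list entry.
# Field types and ordering are hypothetical, not a standardized interface.
import struct
from dataclasses import dataclass, astuple

# track id (uint16), class (uint8), confidence % (uint8),
# x, y, vx, vy, ax, ay, length, width (float32 each), little-endian, no padding
OBJECT_FORMAT = "<HBB8f"


@dataclass
class RadarObject:
    track_id: int        # unique tracking identifier across cycles
    obj_class: int       # classification code (hypothetical enumeration)
    confidence_pct: int  # classification confidence, 0..100
    x_m: float
    y_m: float
    vx_ms: float
    vy_ms: float
    ax_ms2: float
    ay_ms2: float
    length_m: float
    width_m: float

    def pack(self) -> bytes:
        """Serialize the fields in declaration order."""
        return struct.pack(OBJECT_FORMAT, *astuple(self))


if __name__ == "__main__":
    obj = RadarObject(17, 2, 93, 45.2, -1.8, -3.5, 0.1, 0.0, 0.0, 4.6, 1.9)
    entry = obj.pack()
    print(len(entry), "bytes per object;", 64 * len(entry), "bytes for 64 objects")
```

With this layout each object takes 36 bytes, so even a list of several dozen tracked objects is much smaller than a point cloud of hundreds or thousands of detections.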

Factors Driving Bandwidth Growth

The rapid growth in radar data output stems from several converging trends in automotive technology. Advanced ADAS implementations increasingly require finer-grained object detection and classification to make sophisticated driving decisions. Where earlier systems might simply detect the presence of an object, modern implementations must distinguish between pedestrians, cyclists, motorcycles, passenger cars, commercial vehicles, and various types of roadside infrastructure.

This enhanced classification capability necessitates more detailed radar signatures, requiring higher resolution data and more sophisticated processing algorithms. The resulting data volume growth places increasing strain on communication systems that were designed for earlier generations of sensors with more modest bandwidth requirements.

Furthermore, the trend toward faster safety response times drives the need for higher-frequency data updates. Critical safety functions such as Automatic Emergency Braking (AEB), Pedestrian Collision Warning (PCW), and Lane Departure Warning (LDW) systems require minimal latency between threat detection and response activation. Achieving these response times requires not only faster sensor processing but also higher-speed communication links to minimize data transmission delays.

Next-Generation Radar Technologies

The emergence of 4D imaging radar technology represents the next evolutionary step in automotive radar development. Unlike conventional radar systems that provide range, velocity, and azimuth information, 4D systems add elevation detection capabilities, creating comprehensive three-dimensional environmental maps with velocity information for each detected point. This additional dimension significantly increases data volume while providing enhanced object classification and environmental understanding capabilities.

The integration of artificial intelligence and machine learning algorithms into radar processing further amplifies data requirements. AI-driven sensor fusion systems often work on raw or minimally processed sensor data to optimize environmental perception models. Such systems consume substantially more bandwidth than traditional rule-based processing but can perform significantly better in complex scenarios.

3. CAN XL: The Next Generation Communication Solution

Evolution of CAN Technology

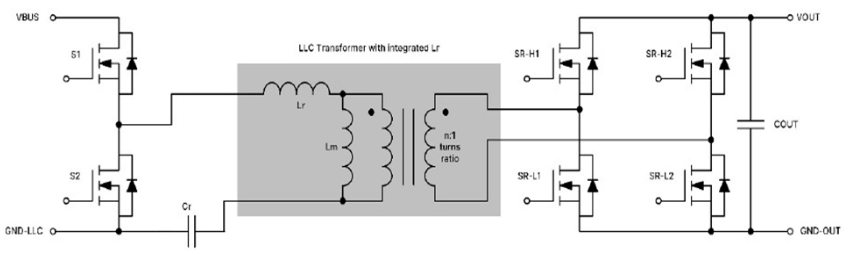

The Controller Area Network (CAN) protocol has served as the backbone of automotive communication systems for decades, evolving through multiple generations to meet changing industry requirements. The progression from Classic CAN through CAN FD to CAN XL represents a continuous refinement process, with each generation addressing specific limitations while maintaining backward compatibility and preserving the fundamental strengths that made CAN successful in automotive applications.

CAN XL represents the third generation of this evolutionary process, incorporating lessons learned from previous implementations while addressing the specific challenges posed by modern high-bandwidth applications. The protocol maintains the robust error handling, deterministic behavior, and cost-effective implementation characteristics that made its predecessors successful while dramatically expanding performance capabilities.

Technical Innovations in CAN XL

The most significant advancement in CAN XL is the expansion of the maximum payload from the 64-byte limit of CAN FD to 2048 bytes per frame. This 32-fold increase in payload capacity fundamentally changes the efficiency of data transmission, particularly for applications that generate large data blocks such as radar point clouds or compressed sensor data.
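The effect of the larger payload on frame count can be shown by splitting one block of radar data into maximum-size payloads. The 8000-byte block is a hypothetical example, and per-frame header and CRC overhead is ignored here; the sketch only counts frames.

```python
# Sketch: frames needed to move one block of radar data, using only the
# maximum payload sizes (64 bytes for CAN FD, 2048 bytes for CAN XL).
# The 8000-byte block is a hypothetical example; per-frame overhead is ignored.
import math

MAX_PAYLOAD = {"CAN FD": 64, "CAN XL": 2048}


def frames_needed(block_bytes: int, max_payload: int) -> int:
    """Number of frames when the block is split into maximum-size payloads."""
    return math.ceil(block_bytes / max_payload)


if __name__ == "__main__":
    block = 8000
    for proto, size in MAX_PAYLOAD.items():
        print(f"{proto}: {frames_needed(block, size)} frames")
    print("payload ratio:", MAX_PAYLOAD["CAN XL"] // MAX_PAYLOAD["CAN FD"])
```

The same block needs 125 CAN FD frames but only 4 CAN XL frames, and every frame avoided saves its fixed header, CRC, and arbitration overhead. CAN FD payload lengths are also restricted to 0 to 8 bytes and then 12, 16, 20, 24, 32, 48, or 64 bytes, whereas CAN XL accepts any length from 1 to 2048 bytes, so less padding is wasted.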

Beyond payload expansion, CAN XL is designed with the growing cybersecurity concerns of connected vehicles in mind. Each frame carries a SEC bit indicating whether the payload is protected by CANsec, a security layer specified by CAN in Automation (CiA) that provides frame authentication and optional encryption, helping protect critical vehicle systems from malicious attacks.

The protocol also strengthens functional safety in line with the stringent reliability requirements of ADAS applications. CAN XL protects the frame header with a 13-bit preface CRC and the entire frame with a 32-bit frame CRC, so communication errors are detected and contained quickly, preventing corrupted data from propagating into safety-critical decision-making.
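Several CAN XL header fields relate directly to these features: an 11-bit priority identifier for arbitration, an 8-bit SDU type (SDT) describing the payload content, the SEC bit for CANsec, an 11-bit DLC that encodes the payload length minus one, an 8-bit virtual CAN network ID (VCID), and a 32-bit acceptance field (AF). The `CanXlHeader` class below is a hypothetical model that only range-checks these logical fields. The field widths reflect the published CAN XL frame format as we understand it and should be checked against CiA 610-1 and ISO 11898-1:2024. The class does not encode the bit stream or compute CRCs.

```python
# Sketch: selected logical CAN XL header fields with range checks.
# Field widths as we understand the CAN XL frame format (CiA 610-1 /
# ISO 11898-1:2024); verify against the standard. Hypothetical class:
# no bit-level encoding, stuffing, or CRC calculation is performed.
from dataclasses import dataclass


@dataclass(frozen=True)
class CanXlHeader:
    priority_id: int  # 11-bit priority identifier used in arbitration
    sdt: int          # 8-bit SDU type: kind of content in the payload
    sec: bool         # set when the payload is protected by CANsec
    vcid: int         # 8-bit virtual CAN network ID
    af: int           # 32-bit acceptance field
    payload: bytes    # 1..2048 bytes

    def __post_init__(self) -> None:
        if not 0 <= self.priority_id < 2**11:
            raise ValueError("priority_id must fit in 11 bits")
        if not (0 <= self.sdt < 2**8 and 0 <= self.vcid < 2**8):
            raise ValueError("sdt and vcid must fit in 8 bits")
        if not 0 <= self.af < 2**32:
            raise ValueError("af must fit in 32 bits")
        if not 1 <= len(self.payload) <= 2048:
            raise ValueError("payload must be 1..2048 bytes")

    @property
    def dlc(self) -> int:
        """Value of the 11-bit DLC field: payload length minus one."""
        return len(self.payload) - 1


if __name__ == "__main__":
    hdr = CanXlHeader(priority_id=0x123, sdt=0x01, sec=False,
                      vcid=0, af=0x42, payload=bytes(2048))
    print("DLC field:", hdr.dlc)
```

Encoding the length as DLC + 1 is what lets an 11-bit field cover every payload length from 1 to 2048 bytes.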

Architectural Flexibility and Implementation Options

CAN XL offers considerable architectural flexibility through its support for mixed networks. CAN FD and CAN XL nodes can share the same bus at speeds up to 8 Mbit/s, provided the CAN FD controllers implement the protocol exception event defined in ISO 11898-1:2015, which lets them ignore CAN XL frames instead of rejecting them with error frames. This enables gradual migration strategies: high-bandwidth nodes are upgraded to CAN XL while existing CAN FD infrastructure continues to serve lower-bandwidth applications.

For applications requiring maximum performance, pure CAN XL networks can achieve communication speeds up to 20 Mbit/s, providing substantial bandwidth increases over previous generation protocols. This high-speed capability is particularly valuable for applications such as radar sensor networks where multiple high-bandwidth sensors must share common communication infrastructure.

4. Performance Analysis: CAN FD vs. CAN XL

Quantitative Performance Comparisons

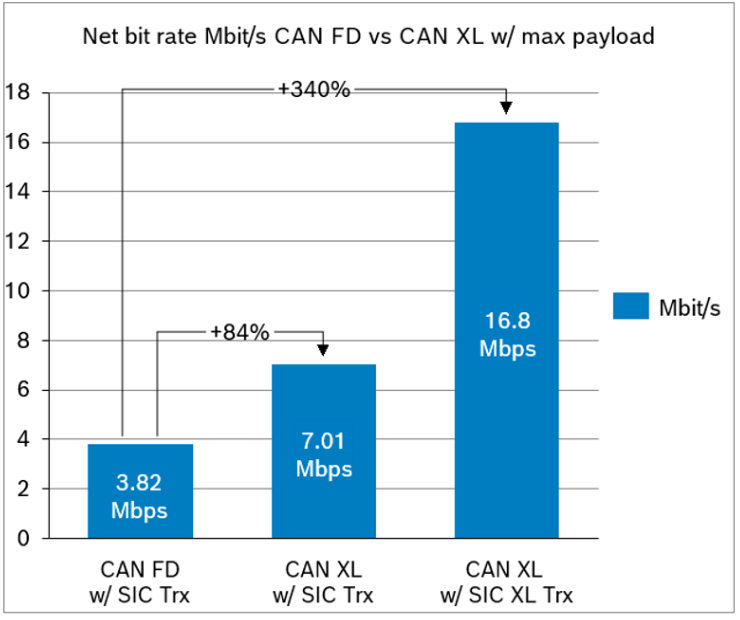

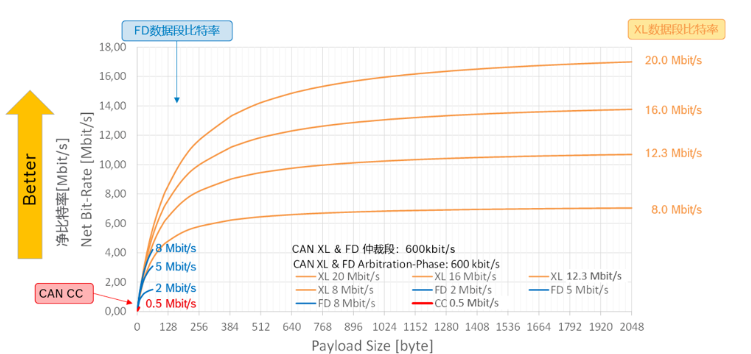

Comprehensive analysis of communication efficiency reveals substantial advantages for CAN XL implementations across multiple performance metrics. When comparing systems operating at equivalent 8 Mbit/s speeds, CAN XL achieves 84% higher net bitrate compared to CAN FD implementations using CAN SIC transceivers. This improvement stems primarily from the increased payload efficiency enabled by larger frame sizes, which amortize protocol overhead across more user data.
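The amortization effect can be illustrated with a simplified frame-time model: a fixed overhead paid in the slower arbitration phase, plus overhead and payload bits in the faster data phase. All overhead bit counts and the 500 kbit/s arbitration rate below are hypothetical placeholders, not values from ISO 11898-1. The model also ignores bit stuffing and inter-frame space, so it is not expected to reproduce the 84% figure above, only the direction of the effect.

```python
# Simplified model of why larger frames raise net bitrate: protocol overhead
# is paid once per frame and amortized over the payload.
# All overhead bit counts are HYPOTHETICAL placeholders, not values from
# ISO 11898-1; bit stuffing and inter-frame space are ignored.

def net_bitrate(payload_bytes: int, arb_rate: float, data_rate: float,
                arb_overhead_bits: int, data_overhead_bits: int) -> float:
    """Payload bits delivered per second of bus time for back-to-back frames."""
    payload_bits = 8 * payload_bytes
    frame_time = (arb_overhead_bits / arb_rate
                  + (data_overhead_bits + payload_bits) / data_rate)
    return payload_bits / frame_time


if __name__ == "__main__":
    ARB_RATE = 500e3  # assumed arbitration-phase bit rate (bit/s)
    DATA_RATE = 8e6   # data-phase bit rate used in the comparison above
    fd = net_bitrate(64, ARB_RATE, DATA_RATE, arb_overhead_bits=30, data_overhead_bits=30)
    xl = net_bitrate(2048, ARB_RATE, DATA_RATE, arb_overhead_bits=30, data_overhead_bits=60)
    print(f"64-byte frames: {fd / 1e6:.2f} Mbit/s, 2048-byte frames: {xl / 1e6:.2f} Mbit/s")
```

Whatever overhead values are chosen, the net bitrate approaches the data-phase rate as the payload grows, because the fixed per-frame cost becomes negligible relative to the payload.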

The advantage grows further at CAN XL’s maximum speed: CAN XL at 20 Mbit/s delivers a 340% higher net bitrate than CAN FD at 8 Mbit/s, or about 4.4 times the communication capacity. This increase opens the door to applications that would be impractical with previous-generation protocols.

Practical Implications for Radar Applications

These performance improvements translate directly into enhanced radar system capabilities and improved overall vehicle performance. Higher bandwidth availability enables radar sensors to transmit more detailed environmental data, supporting enhanced object classification and tracking capabilities. The reduced latency achievable with higher-speed communication also enables faster safety response times, directly improving vehicle safety performance.
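The latency benefit can be bounded from below by the time needed just to clock one cycle's payload onto the bus. The sketch counts payload bits only (no frame overhead, no arbitration losses), and the 8000-byte cycle size is a hypothetical example.

```python
# Sketch: lower bound on the time to move one measurement cycle's data,
# counting payload bits only (no frame overhead, no arbitration losses).
# The 8000-byte cycle size is a hypothetical example.

def transfer_time_ms(payload_bytes: int, bitrate_bps: float) -> float:
    """Milliseconds needed to transmit the payload bits at the given rate."""
    return payload_bytes * 8 / bitrate_bps * 1e3


if __name__ == "__main__":
    for rate in (5e6, 8e6, 20e6):
        print(f"{rate / 1e6:.0f} Mbit/s: {transfer_time_ms(8000, rate):.1f} ms")
```

Real transfers take longer once overhead is included, but the bound scales inversely with bit rate: 12.8 ms at 5 Mbit/s versus 3.2 ms at 20 Mbit/s, a meaningful share of a 50 ms measurement cycle.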

The increased bandwidth also provides headroom for future capability expansion without requiring communication system redesign. As radar sensors continue to evolve toward higher resolution and more sophisticated processing capabilities, CAN XL provides the communication infrastructure necessary to support these advances.

5. System Architecture Analysis: Five-Radar Implementation Scenarios

Premium and High-End Vehicle Configurations

Premium and high-end vehicle implementations typically deploy five millimeter-wave radar sensors in a comprehensive coverage pattern, including one forward-looking long-range radar and four corner-mounted medium-range radars providing 360-degree environmental awareness. These configurations generate substantial data volumes that challenge traditional communication architectures.

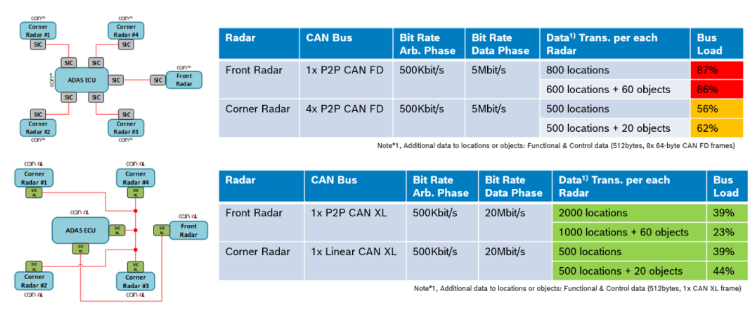

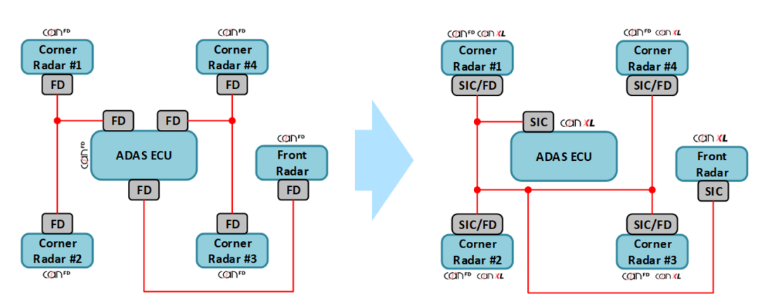

Current CAN FD implementations for these scenarios typically require five point-to-point communication buses, one dedicated to each radar sensor. Operating at 5 Mbit/s, these implementations experience bus loading levels exceeding 50%, with some configurations reaching 87% capacity utilization. Such high loading levels are impractical for production deployment due to insufficient margin for data volume growth and potential timing violations under peak loading conditions.

CAN XL enables dramatic architectural simplification and performance improvement for these demanding applications. A two-bus architecture utilizing one point-to-point connection for the front radar and one linear bus serving all four corner radars can handle equivalent data loads at only 40% capacity utilization when operating at 20 Mbit/s. This configuration provides substantial headroom for future capability expansion while reducing system complexity.
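Bus load can be treated as the fraction of each measurement cycle that frames occupy. On a point-to-point link only one radar contributes, while on a shared linear bus the contributions of all attached radars add up, which is why the shared bus needs fast frames to stay within a safe load. The frame counts and durations below are hypothetical, and arbitration delays between competing nodes are not modeled.

```python
# Sketch: bus load as the fraction of each cycle that frames occupy.
# On a shared linear bus the per-radar loads add up.
# Frame counts and durations are hypothetical; arbitration delays are ignored.

def bus_load(frames_per_cycle: int, frame_duration_s: float, cycle_s: float) -> float:
    """Fraction of the cycle during which the bus is busy."""
    return frames_per_cycle * frame_duration_s / cycle_s


def shared_bus_load(per_node_loads: list[float]) -> float:
    """Total load when several nodes transmit on one bus."""
    return sum(per_node_loads)


if __name__ == "__main__":
    cycle = 0.050  # 50 ms measurement cycle
    corner = bus_load(frames_per_cycle=5, frame_duration_s=0.0008, cycle_s=cycle)
    print(f"one corner radar: {corner:.0%}")
    print(f"four corner radars on one bus: {shared_bus_load([corner] * 4):.0%}")
```

Halving the frame duration, for example by raising the data-phase rate, halves every contribution, which is what makes a four-radar linear bus feasible at higher speeds.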

The economic benefits of CAN XL in premium scenarios are substantial. Reducing the number of communication buses from five to two cuts the bus interfaces at the central ECU by about 60%, with corresponding reductions in transceivers, electromagnetic compatibility (EMC) filters, connectors, and associated wiring harnesses. These component savings translate directly into lower manufacturing costs and simpler assembly.
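Transceiver counts for the two topologies can be tallied under a simple assumption: one transceiver per node per bus, so a point-to-point bus has two and a linear bus has one for the ECU plus one per attached radar. The sketch shows that the 60% reduction applies to the ECU side, while the vehicle-wide count falls by a smaller share because each radar still needs its own transceiver.

```python
# Sketch: transceiver count per topology, assuming one transceiver per node
# per bus. A point-to-point bus has two nodes; a linear bus has the ECU
# plus every radar attached to it.

def transceivers(buses: list[int]) -> tuple[int, int]:
    """buses lists the number of radars on each bus; returns (ECU side, total)."""
    ecu_side = len(buses)
    total = sum(radars + 1 for radars in buses)
    return ecu_side, total


if __name__ == "__main__":
    can_fd = [1, 1, 1, 1, 1]  # five point-to-point buses
    can_xl = [1, 4]           # front radar point-to-point + linear corner bus
    for name, topo in (("CAN FD", can_fd), ("CAN XL", can_xl)):
        ecu, total = transceivers(topo)
        print(f"{name}: {ecu} at the ECU, {total} in total")
```

The ECU side drops from 5 to 2 transceivers, and the vehicle-wide total drops from 10 to 7.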

Mid-End Vehicle Optimizations

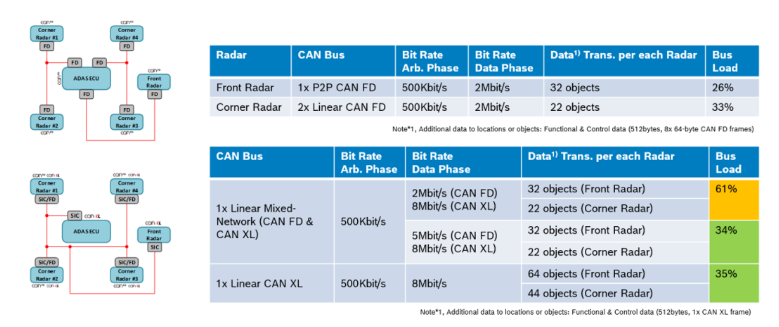

Mid-end vehicle implementations present different optimization opportunities where mixed-network approaches can provide incremental improvements while maintaining cost competitiveness. These scenarios typically begin with three-bus CAN FD architectures and can benefit from selective CAN XL upgrades that provide performance improvements without requiring complete system redesign.

Mixed CAN FD and CAN XL implementations operating at 2 Mbit/s and 8 Mbit/s respectively can achieve significant bus load reductions while maintaining compatibility with existing system components. Further optimization through speed increases to 5 Mbit/s CAN FD and 8 Mbit/s CAN XL can achieve 34% bus loading, providing excellent performance margins.

Full CAN XL implementations at 8 Mbit/s maintain 35% bus loading even with doubled data volume, providing substantial growth capability for future feature additions. This headroom is crucial for mid-market vehicles where feature content continues to expand but cost pressures remain significant.

Conclusion and Future Outlook

The analysis presented demonstrates compelling advantages for CAN XL in automotive millimeter-wave radar applications. The combination of much larger payload capacity, higher communication speeds, and architectural flexibility makes CAN XL a strong fit for current and future radar system requirements.

As millimeter-wave radar advances toward higher resolution, finer object classification, and integration with artificial intelligence processing, bandwidth requirements are expected to keep growing rapidly. CAN XL provides the communication infrastructure needed to support these advances while maintaining the cost-effectiveness and reliability that automotive applications demand.

The transition to CAN XL is more than a simple protocol upgrade; it makes practical automotive applications and capabilities that were previously constrained by communication bandwidth. As the technology matures and adoption spreads, CAN XL is well placed to become a standard communication interface for next-generation ADAS, supporting the industry’s continued evolution toward fully autonomous mobility.