Printed circuit board (PCB) design has become increasingly sophisticated and critical to product success. As devices become smaller, faster, and more complex, optimizing your PCB manufacturing layout is essential for achieving reliable performance, cost-effectiveness, and manufacturability. Whether you’re designing consumer electronics, industrial equipment, or cutting-edge IoT devices, implementing strategic layout optimization techniques can mean the difference between a successful product launch and costly redesigns.

Effective PCB layout optimization goes beyond simply fitting components onto a board. It requires a comprehensive understanding of electrical principles, manufacturing constraints, thermal management, and signal integrity considerations. The following five key strategies will help you create PCB layouts that not only meet your functional requirements but also optimize manufacturing efficiency, reduce costs, and improve overall product reliability.

Strategy 1: Design for Manufacturing (DFM) Principles

Design for Manufacturing represents the foundation of successful PCB layout optimization. This approach involves making design decisions that simplify the manufacturing process while maintaining product functionality and performance. By considering manufacturing constraints early in the design phase, you can avoid costly revisions and production delays.

The cornerstone of DFM in PCB design is understanding your manufacturer’s capabilities and limitations. Different fabrication houses have varying specifications for minimum trace widths, via sizes, spacing requirements, and layer stackup options. Establishing these parameters before beginning your layout ensures your design will be manufacturable without requiring expensive custom processes or premium pricing.

Trace width and spacing optimization plays a crucial role in DFM implementation. While it might be tempting to use the minimum allowable dimensions to maximize routing density, this approach often leads to manufacturing challenges and reduced yields. Instead, use wider traces and larger spacing wherever possible, reserving minimum dimensions only for areas where space constraints absolutely require them. This approach improves manufacturing reliability and often reduces costs by allowing the use of standard fabrication processes.
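As a rough illustration of reserving minimum dimensions for tight areas only, the sketch below sorts trace widths into three bins relative to a fabricator's stated minimum. The 0.10 mm minimum and the 1.5x comfort margin are hypothetical placeholders, not figures from any particular fabricator; substitute the values from your own fab's capability sheet.

```python
def classify_dimension(value_mm: float, fab_min_mm: float, margin: float = 1.5) -> str:
    """Classify a trace width or spacing against the fab minimum.

    'violation'   : below the fabricator's minimum
    'at-minimum'  : legal, but within `margin` times the minimum
    'comfortable' : at or above `margin` times the minimum
    """
    if value_mm < fab_min_mm:
        return "violation"
    if value_mm < fab_min_mm * margin:
        return "at-minimum"
    return "comfortable"


def summarize(values_mm, fab_min_mm: float, margin: float = 1.5) -> dict:
    """Count how many dimensions fall into each category."""
    counts = {"violation": 0, "at-minimum": 0, "comfortable": 0}
    for value in values_mm:
        counts[classify_dimension(value, fab_min_mm, margin)] += 1
    return counts


if __name__ == "__main__":
    FAB_MIN_TRACE_MM = 0.10  # assumed capability, for illustration only
    print(summarize([0.09, 0.12, 0.20, 0.25], FAB_MIN_TRACE_MM))
```

A large "at-minimum" count suggests the layout leans on minimum dimensions more than space constraints require.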

Component placement strategy significantly impacts manufacturing efficiency. Grouping components by type and size facilitates automated assembly processes and reduces setup times. Orient components consistently to minimize machine programming complexity and reduce the likelihood of placement errors. Consider the assembly sequence when placing components, ensuring that taller components don’t shadow smaller neighbors from heat during reflow soldering or from the solder wave during wave soldering.

Via design and placement require careful consideration for optimal manufacturability. Use standard via sizes whenever possible, as non-standard dimensions require special tooling and increase costs. Minimize the number of different via sizes in your design to reduce manufacturing complexity. Place vias away from component pads to avoid interference during assembly and to prevent solder wicking issues that can lead to poor joints.

Panelization strategy should be considered from the beginning of your layout process. Design your PCB dimensions and shape to optimize panel utilization, reducing waste and lowering per-unit costs. Include appropriate tooling holes, fiducial markers, and handling features that facilitate automated manufacturing processes. Consider how individual boards will be separated from the panel, ensuring that the separation method won’t damage critical circuits or components.
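To see how board dimensions drive panel utilization, the sketch below counts how many identical rectangular boards fit on a panel in a simple grid, trying both orientations. The panel size, routing gap, and border width in the example are assumed values; real panels also need room for tooling holes, fiducials, and breakaway tabs, which this estimate ignores.

```python
def boards_per_panel(panel_w, panel_h, board_w, board_h, gap=0.0, border=0.0):
    """Grid count of boards on a panel; all dimensions in the same unit (e.g. mm).

    `gap` is the spacing between adjacent boards, `border` the unusable
    margin on each panel edge. Mixed orientations are not considered.
    """
    usable_w = panel_w - 2 * border
    usable_h = panel_h - 2 * border

    def fit(bw, bh):
        if usable_w < bw or usable_h < bh:
            return 0
        cols = int((usable_w + gap) // (bw + gap))
        rows = int((usable_h + gap) // (bh + gap))
        return cols * rows

    # Try the board as drawn and rotated by 90 degrees; keep the better fit.
    return max(fit(board_w, board_h), fit(board_h, board_w))


def utilization(panel_w, panel_h, board_w, board_h, count):
    """Fraction of the panel area that ends up as finished boards."""
    return count * board_w * board_h / (panel_w * panel_h)


if __name__ == "__main__":
    # Assumed 457 mm x 610 mm panel, 2 mm routing gap, 10 mm border.
    n = boards_per_panel(457, 610, 50, 80, gap=2, border=10)
    print(n, round(utilization(457, 610, 50, 80, n), 3))
```

Running the same function over a few candidate board outlines early in the project shows whether a small change in width or height gains an extra row or column.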

Strategy 2: Signal Integrity and EMI Optimization

Modern electronic devices operate at increasingly high frequencies, making signal integrity and electromagnetic interference (EMI) control critical aspects of PCB layout optimization. Poor signal integrity can lead to timing errors, data corruption, and system failures, while inadequate EMI control can cause regulatory compliance issues and interference with other devices.

Ground plane strategy forms the backbone of effective signal integrity design. Implement continuous ground planes wherever possible, as they provide low-impedance return paths for high-frequency signals and help control EMI. When ground plane splits are unavoidable, ensure that high-speed signals don’t cross these discontinuities, as this can cause signal reflections and increased electromagnetic emissions. Use stitching vias to connect ground planes on different layers, maintaining low impedance paths throughout your stackup.

Controlled impedance routing becomes essential when dealing with high-speed digital signals or sensitive analog circuits. Work with your fabricator to establish appropriate trace geometries for your target impedance values, considering factors such as dielectric constant, trace width, thickness, and the distance to reference planes. Maintain consistent impedance along signal paths by avoiding unnecessary vias, sharp bends, or changes in trace width. For differential pairs, maintain tight coupling and consistent spacing to preserve signal quality.
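For a first estimate before talking to the fabricator, the widely quoted IPC-2141 closed-form approximation for surface microstrip can be sketched as below. It is an approximation only, generally considered reasonable for width-to-height ratios of roughly 0.1 to 2.0, and the stackup numbers in the example are assumptions. The fabricator's field-solver result should take precedence.

```python
import math


def microstrip_z0(width, height, thickness, er):
    """Approximate surface-microstrip impedance in ohms (IPC-2141 style formula).

    width, height (dielectric thickness to the reference plane) and
    thickness (copper) must share one length unit; er is the relative
    dielectric constant.
    """
    return 87.0 / math.sqrt(er + 1.41) * math.log(5.98 * height / (0.8 * width + thickness))


def width_for_z0(z0, height, thickness, er):
    """Invert the same formula to get the trace width for a target impedance."""
    ratio = math.exp(z0 * math.sqrt(er + 1.41) / 87.0)
    return (5.98 * height / ratio - thickness) / 0.8


if __name__ == "__main__":
    # Assumed stackup: 0.2 mm dielectric, 35 um copper, er = 4.3.
    print(round(microstrip_z0(0.35, 0.2, 0.035, 4.3), 1))
    print(round(width_for_z0(50.0, 0.2, 0.035, 4.3), 3))
```

With these assumed values the 0.35 mm trace comes out at roughly 48.6 Ω, and a 50 Ω target needs a width of about 0.335 mm.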

Power distribution network (PDN) design significantly impacts both signal integrity and EMI performance. Implement a low-impedance power distribution system using dedicated power planes, wide traces, and strategically placed decoupling capacitors. Place decoupling capacitors as close as possible to power pins of active components, with different capacitor values targeting various frequency ranges. Consider the inductance of vias and traces when connecting decoupling capacitors to power and ground planes.
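One common way to put a number on "low-impedance" is the target impedance rule of thumb: the allowed ripple voltage divided by the worst-case transient current. The sketch below assumes that rule; the supply voltage, ripple tolerance, and current step in the example are illustrative values, not requirements from any datasheet.

```python
def target_impedance(v_supply, ripple_fraction, transient_current):
    """Target PDN impedance in ohms.

    v_supply          : nominal rail voltage in volts
    ripple_fraction   : allowed ripple as a fraction of the rail (0.05 = 5 %)
    transient_current : worst-case current step in amperes
    """
    if transient_current <= 0:
        raise ValueError("transient_current must be positive")
    return v_supply * ripple_fraction / transient_current


if __name__ == "__main__":
    # Assumed 1.0 V rail, 5 % ripple budget, 2 A load step.
    print(target_impedance(1.0, 0.05, 2.0))
```

The PDN, including planes, vias, and decoupling capacitors, should ideally stay below this value across the frequency range where the load draws transient current.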

Layer stackup optimization provides the foundation for controlled impedance and EMI management. Work with your fabricator to develop a stackup that supports your impedance requirements while providing adequate power and ground plane coverage. Alternate signal layers with reference planes to provide consistent return paths and minimize crosstalk. Consider using thinner dielectrics for better high-frequency performance, but balance this against manufacturing complexity and cost.

Routing topology and techniques play a crucial role in maintaining signal integrity. Keep high-speed traces as short as possible and avoid unnecessary vias that introduce discontinuities. Route critical signals on inner layers when possible, as they benefit from better shielding and more consistent impedance. Implement proper termination strategies for high-speed signals, using series termination for point-to-point connections and parallel termination for longer lines or multiple loads.

Strategy 3: Thermal Management and Reliability Enhancement

Thermal management has become increasingly critical as component densities increase and power dissipation rises in modern electronic designs. Effective thermal design not only prevents component damage but also improves reliability, extends operating life, and maintains consistent performance across temperature variations.

Heat dissipation pathway design requires careful consideration of thermal flow from heat-generating components to heat sinks or ambient air. Identify components with significant power dissipation and create efficient thermal paths using copper planes, thermal vias, and strategic component placement. Large copper areas act as heat spreaders, distributing thermal energy over a wider area to facilitate cooling. Connect these copper areas to internal planes when possible to increase the thermal mass available for heat absorption.

Thermal via implementation provides crucial vertical heat conduction paths in multilayer PCBs. Place arrays of thermal vias directly beneath high-power components to conduct heat away from the component and into internal copper layers or the opposite side of the board. Use multiple small vias rather than fewer large ones to maximize heat transfer while minimizing impact on routing density. Consider filled vias for applications requiring maximum thermal performance, though this adds manufacturing cost and complexity.
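A first-order estimate of what a thermal via array buys you treats each via as a hollow copper cylinder conducting heat along its length. The sketch below makes that simplification and ignores spreading resistance, solder or epoxy fill, and the interfaces at either end. The copper conductivity and the via geometry in the example are assumed typical values.

```python
import math

COPPER_K = 385.0  # W/(m*K), assumed conductivity of plated copper


def via_thermal_resistance(board_thickness_mm, drill_mm, plating_um, k=COPPER_K):
    """Thermal resistance (K/W) of one plated via barrel along its axis."""
    outer_d = drill_mm * 1e-3                           # barrel outer diameter, m
    inner_d = max(outer_d - 2 * plating_um * 1e-6, 0.0) # hole left after plating, m
    area = math.pi / 4 * (outer_d ** 2 - inner_d ** 2)  # copper cross-section, m^2
    return (board_thickness_mm * 1e-3) / (k * area)


def array_thermal_resistance(count, board_thickness_mm, drill_mm, plating_um, k=COPPER_K):
    """Identical vias in parallel: the resistance divides by the via count."""
    return via_thermal_resistance(board_thickness_mm, drill_mm, plating_um, k) / count


if __name__ == "__main__":
    # Assumed 1.6 mm board, 0.3 mm drill, 25 um plating.
    print(round(via_thermal_resistance(1.6, 0.3, 25), 1))
    print(round(array_thermal_resistance(16, 1.6, 0.3, 25), 1))
```

Under these assumptions a single via is on the order of 190 K/W, while a 4 x 4 array drops to roughly 12 K/W, which is why arrays of small vias are preferred.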

Component placement strategy for thermal management involves distributing heat sources across the board rather than concentrating them in one area. Separate high-power components when possible to prevent hot spots that can lead to thermal runaway or accelerated aging. Place temperature-sensitive components away from heat sources and in areas with good airflow or thermal coupling to heat sinks. Consider the temperature coefficient of critical components, such as voltage references, crystals, and precision resistors, when placing them relative to heat sources.

Copper balance and symmetry affect both thermal performance and mechanical reliability. Maintain similar copper distribution on opposite sides of the board to prevent warping during assembly and temperature cycling. Use copper fills in unused areas to improve heat spreading and reduce thermal gradients across the board. However, ensure that these fills don’t create EMI issues or interfere with impedance control requirements.
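A quick way to spot copper imbalance is to compare the copper coverage of layers that mirror each other about the center of the stackup. The sketch below assumes you can export a coverage fraction per layer from your CAD tool; the 0.2 threshold and the example coverage values are hypothetical.

```python
def mirrored_pairs(coverage):
    """Pair each layer with its mirror about the stackup center.

    `coverage` lists the copper fraction (0..1) per layer, top to bottom.
    Returns (layer_index, mirror_index, absolute_difference) tuples.
    """
    n = len(coverage)
    return [(i, n - 1 - i, abs(coverage[i] - coverage[n - 1 - i])) for i in range(n // 2)]


def unbalanced_pairs(coverage, threshold=0.2):
    """Return the layer pairs whose coverage differs by more than `threshold`."""
    return [(a, b) for a, b, diff in mirrored_pairs(coverage) if diff > threshold]


if __name__ == "__main__":
    # Assumed 4-layer board: top signal, ground, power, sparse bottom layer.
    print(unbalanced_pairs([0.60, 0.90, 0.85, 0.30]))
```

Here the outer pair (layers 0 and 3) is flagged, suggesting a copper fill on the bottom layer would improve balance.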

Solder joint reliability depends heavily on thermal design, as thermal cycling creates mechanical stress in solder joints. Minimize temperature differences between components and the PCB substrate by providing good thermal coupling. Consider the coefficient of thermal expansion mismatch between components and the PCB material, especially for large components or those with different package materials. Implement thermal relief connections for pads that must be soldered to large copper areas; the narrow spokes limit heat flow into the copper during soldering, so the joint reaches soldering temperature and wets properly.
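The effect of CTE mismatch can be approximated to first order as the difference in expansion between component and board, scaled by the temperature swing and the distance from the component's center (the neutral point) to the joint. The sketch below uses that simplification; the CTE values in the example are assumed typical figures for a ceramic package and an FR-4 board, not measured data.

```python
def cte_mismatch_displacement_um(cte_component_ppm, cte_board_ppm, delta_t_c, dnp_mm):
    """Relative in-plane displacement at a solder joint, in micrometres.

    cte_*_ppm : coefficients of thermal expansion in ppm/degC
    delta_t_c : temperature swing in degC
    dnp_mm    : distance from the component's neutral point to the joint, mm
    """
    delta_alpha = abs(cte_board_ppm - cte_component_ppm) * 1e-6  # per degC
    return delta_alpha * delta_t_c * dnp_mm * 1e3  # mm converted to um


if __name__ == "__main__":
    # Assumed: ceramic package 6.5 ppm/degC, FR-4 16 ppm/degC, 80 degC swing,
    # outermost joint 5 mm from the package center.
    print(round(cte_mismatch_displacement_um(6.5, 16.0, 80.0, 5.0), 2))
```

Because the displacement grows linearly with distance from the neutral point, the corner joints of large packages see the most strain per cycle.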

Strategy 4: Power Integrity and Distribution Optimization

Power integrity has become as critical as signal integrity in modern PCB design, particularly with the trend toward lower supply voltages and higher current requirements. Poor power distribution can lead to voltage droops, increased noise, and system instability, while optimized power delivery ensures reliable operation across all operating conditions.

Power distribution network architecture requires careful planning to deliver clean, stable power to all components. Implement dedicated power planes for major supply voltages, as these provide low impedance distribution and help reduce power supply noise. For multi-voltage designs, carefully plan power plane splits to minimize the impact on signal return paths while maintaining power integrity for each supply domain. Consider using separate layers for different voltage levels to avoid conflicts and simplify routing.

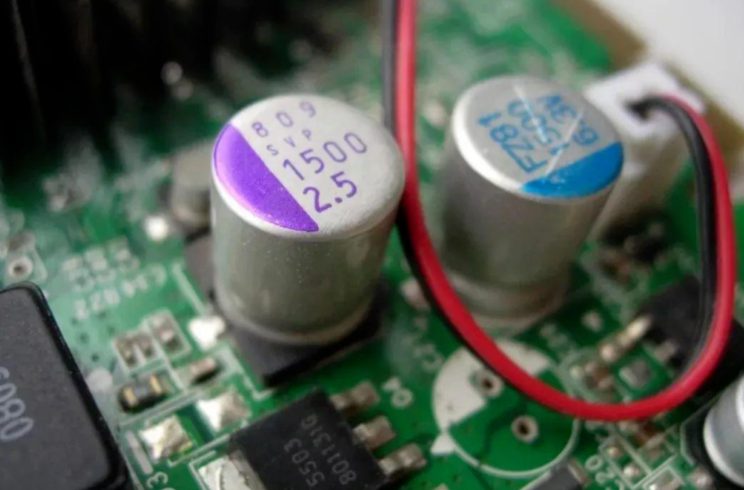

Decoupling capacitor strategy plays a fundamental role in power integrity optimization. Implement a hierarchical decoupling approach using multiple capacitor values to address different frequency ranges. Large electrolytic or tantalum capacitors handle low-frequency variations and provide bulk charge storage, while smaller ceramic capacitors address high-frequency switching noise. Place high-frequency decoupling capacitors as close as possible to the power pins of active components to minimize loop inductance.
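The reason different capacitor values cover different frequency ranges is that each mounted capacitor behaves as a series R-L-C circuit with its own self-resonant frequency. The sketch below models that; the 1 nH mounted inductance and 10 mΩ ESR are assumed round numbers, and real values depend on the package, via pattern, and plane spacing.

```python
import math


def self_resonant_frequency(capacitance_f, inductance_h):
    """Self-resonant frequency in Hz of a series L-C: 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(capacitance_f * inductance_h))


def impedance_magnitude(freq_hz, capacitance_f, inductance_h, esr_ohm):
    """|Z| of a series R-L-C capacitor model at a given frequency."""
    omega = 2.0 * math.pi * freq_hz
    reactance = omega * inductance_h - 1.0 / (omega * capacitance_f)
    return math.hypot(esr_ohm, reactance)


if __name__ == "__main__":
    MOUNTED_L = 1e-9  # assumed 1 nH loop inductance per capacitor
    for label, cap in [("10 uF", 10e-6), ("100 nF", 100e-9), ("1 nF", 1e-9)]:
        f0 = self_resonant_frequency(cap, MOUNTED_L)
        print(label, round(f0 / 1e6, 2), "MHz")
```

Below resonance a capacitor looks capacitive, above it the mounting inductance dominates, so reducing loop inductance through close placement matters as much as the capacitance value.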

Current density management ensures reliable power delivery without excessive voltage drops or heating. Calculate current requirements for each section of your power distribution network and size traces and planes accordingly. Use current density guidelines appropriate for your application, considering factors such as temperature rise, trace length, and copper thickness. Implement multiple parallel paths for high-current supplies to distribute the current load and improve reliability.
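As one example of such a guideline, the IPC-2221 curve fit relates current, temperature rise, and copper cross-section. The sketch below applies it to get a minimum trace width. It is a conservative approximation with its own validity limits, and newer guidance such as IPC-2152 may give different results, so treat the output as a starting point rather than a final value.

```python
def ipc2221_trace_width_mm(current_a, temp_rise_c, copper_oz=1.0, external=True):
    """Minimum trace width in mm from the IPC-2221 curve fit.

    I = k * dT**0.44 * A**0.725, with A in square mils,
    k = 0.048 for external layers and 0.024 for internal layers.
    """
    k = 0.048 if external else 0.024
    area_mil2 = (current_a / (k * temp_rise_c ** 0.44)) ** (1.0 / 0.725)
    thickness_mil = copper_oz * 1.378  # 1 oz/ft^2 copper is about 1.378 mil thick
    return area_mil2 / thickness_mil * 0.0254  # mils converted to mm


if __name__ == "__main__":
    # Assumed requirement: 2 A with a 10 degC rise on 1 oz copper.
    print(round(ipc2221_trace_width_mm(2.0, 10.0), 2), "mm external")
    print(round(ipc2221_trace_width_mm(2.0, 10.0, external=False), 2), "mm internal")
```

For the assumed 2 A and 10 °C rise, the external trace comes out near 0.78 mm, and the internal trace needs to be considerably wider because it sheds heat less effectively.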

Voltage regulation placement and routing significantly impact power integrity performance. Place voltage regulators close to their load circuits to minimize resistive losses and improve transient response. Route high-current paths using wide traces or copper pours, and avoid funneling supply current through a single connector pin or a narrow copper neck that could create a bottleneck. Consider the impact of switching regulator layouts on EMI and include appropriate filtering and shielding techniques.
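The resistive loss between a regulator and its load can be estimated from the trace geometry with R = ρL/(w·t). The sketch below assumes room-temperature copper resistivity and a uniform rectangular trace; the dimensions and current in the example are illustrative.

```python
COPPER_RESISTIVITY = 1.68e-8  # ohm*m near 20 degC (assumed; rises with temperature)


def trace_resistance_ohm(length_mm, width_mm, thickness_um, rho=COPPER_RESISTIVITY):
    """DC resistance of a rectangular copper trace."""
    length_m = length_mm * 1e-3
    area_m2 = (width_mm * 1e-3) * (thickness_um * 1e-6)
    return rho * length_m / area_m2


def voltage_drop_v(current_a, length_mm, width_mm, thickness_um):
    """IR drop along the trace at the given DC current."""
    return current_a * trace_resistance_ohm(length_mm, width_mm, thickness_um)


if __name__ == "__main__":
    # Assumed: 50 mm long, 1 mm wide, 35 um (1 oz) copper carrying 2 A.
    print(round(voltage_drop_v(2.0, 50.0, 1.0, 35.0) * 1e3, 1), "mV")
```

A drop of roughly 48 mV in this example is already about 5 % of a 1.0 V rail, which illustrates why regulators for low-voltage supplies belong close to their loads.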

Power supply sequencing and protection features should be considered during the layout phase. Some components require specific power-up sequences or have maximum voltage differential requirements between supplies. Plan your power distribution to support these requirements through proper decoupling, sequencing circuits, and protection components. Include test points for critical power supplies to facilitate debugging and production testing.

Strategy 5: Automation and Advanced Layout Techniques

Modern PCB design tools offer sophisticated automation features that can significantly improve layout quality while reducing design time. Understanding and effectively utilizing these advanced techniques can help you achieve optimal results while maintaining design consistency and meeting complex requirements.

Auto-routing and interactive routing tools have evolved significantly, offering intelligent routing capabilities that consider multiple constraints simultaneously. Modern auto-routers can handle complex rules including impedance control, length matching, and EMI requirements while optimizing routing density and minimizing layer usage. However, successful automation requires careful constraint setup and rule definition. Critical signals often benefit from manual routing or guided auto-routing to ensure optimal performance.

Length matching and timing analysis become crucial in high-speed designs where signal timing directly impacts functionality. Utilize your design tools’ length matching capabilities to equalize trace lengths within specified tolerances for clock distribution, data buses, and differential pairs. Advanced tools can perform timing analysis during the layout process, allowing real-time optimization of critical timing paths. Consider the impact of via delays and trace bends when calculating timing requirements.
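To translate a timing budget into a length tolerance, you can use the propagation delay per unit length, which depends on the effective dielectric constant seen by the signal. The sketch below assumes an ideal stripline, where the effective value equals the material's dielectric constant, and it ignores via and bend delays; the value of 4.3 is an assumed FR-4 figure.

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light in vacuum, mm per picosecond


def delay_ps_per_mm(er_eff):
    """Propagation delay per millimetre for a given effective dielectric constant."""
    return math.sqrt(er_eff) / C_MM_PER_PS


def skew_ps(length_a_mm, length_b_mm, er_eff):
    """Timing skew caused by a length difference between two traces."""
    return abs(length_a_mm - length_b_mm) * delay_ps_per_mm(er_eff)


def max_mismatch_mm(skew_budget_ps, er_eff):
    """Largest length difference that stays within a skew budget."""
    return skew_budget_ps / delay_ps_per_mm(er_eff)


if __name__ == "__main__":
    ER = 4.3  # assumed dielectric constant
    print(round(delay_ps_per_mm(ER), 2), "ps/mm")
    print(round(max_mismatch_mm(10.0, ER), 2), "mm for a 10 ps budget")
```

With these assumptions the delay is close to 6.9 ps/mm, so a 10 ps skew budget allows a mismatch of about 1.4 mm.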

Design rule checking (DRC) and constraint management ensure that your layout meets all electrical, mechanical, and manufacturing requirements. Establish comprehensive design rules early in the project, including manufacturing constraints, electrical requirements, and company standards. Use hierarchical constraint systems that allow different rules for different circuit sections or signal classes. Perform regular DRC checks throughout the design process rather than waiting until completion to identify and resolve issues.
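The idea behind hierarchical constraints can be sketched as a layered lookup in which a net-specific override wins over a net-class rule, which in turn wins over the board default. The rule names and values below are hypothetical and do not correspond to any particular CAD tool's format.

```python
from collections import ChainMap

# Hypothetical rule sets, in millimetres unless the key says otherwise.
DEFAULT_RULES = {"min_width_mm": 0.15, "min_clearance_mm": 0.15}
CLASS_RULES = {
    "power": {"min_width_mm": 0.50},
    "diff_pair": {"min_clearance_mm": 0.20, "target_z_diff_ohm": 100},
}


def rules_for(net_class, net_overrides=None):
    """Resolve rules with precedence: net override, then class, then default."""
    return ChainMap(net_overrides or {}, CLASS_RULES.get(net_class, {}), DEFAULT_RULES)


def check_width(width_mm, net_class, net_overrides=None):
    """Return True when the trace width satisfies the resolved minimum width."""
    return width_mm >= rules_for(net_class, net_overrides)["min_width_mm"]


if __name__ == "__main__":
    print(dict(rules_for("power")))
    print(check_width(0.30, "power"))   # below the power-class minimum
    print(check_width(0.30, "signal"))  # unknown class falls back to defaults
```

Keeping the defaults tied to fabricator limits and layering electrical requirements on top makes it easier to rerun checks when either set of constraints changes.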

3D modeling and mechanical integration capabilities help identify potential mechanical conflicts before manufacturing. Use 3D visualization tools to verify component clearances, connector accessibility, and mechanical fit within enclosures. Consider the impact of component tolerances and PCB warpage on final assembly. Integrate mechanical constraints into your electrical design tools to catch conflicts early in the design process.

Collaboration and version control features become essential for team-based designs or projects requiring multiple iterations. Implement proper version control procedures to track design changes and maintain design history. Use collaborative design features that allow multiple designers to work on different sections simultaneously while maintaining design integrity. Establish clear documentation standards and change control procedures to ensure that all team members understand design requirements and modifications.

Conclusion

Optimizing your PCB manufacturing layout requires a comprehensive approach that balances electrical performance, manufacturing efficiency, thermal management, and design automation. By implementing these five key strategies – Design for Manufacturing principles, signal integrity optimization, thermal management, power integrity enhancement, and advanced layout techniques – you can create PCB designs that meet performance requirements while minimizing costs and maximizing reliability.

Success in PCB layout optimization comes from understanding the interdependencies between these various aspects of design. Manufacturing constraints influence electrical performance, thermal considerations affect component placement, and power integrity impacts signal quality. By considering these relationships early in the design process and applying systematic optimization techniques, you can achieve superior results that meet both technical and business objectives.

The investment in proper PCB layout optimization pays dividends throughout the product lifecycle, from reduced development time and manufacturing costs to improved field reliability and customer satisfaction. As electronic systems continue to evolve toward higher performance and greater integration, mastering these optimization strategies becomes increasingly valuable for design success.